You may also find these interesting...

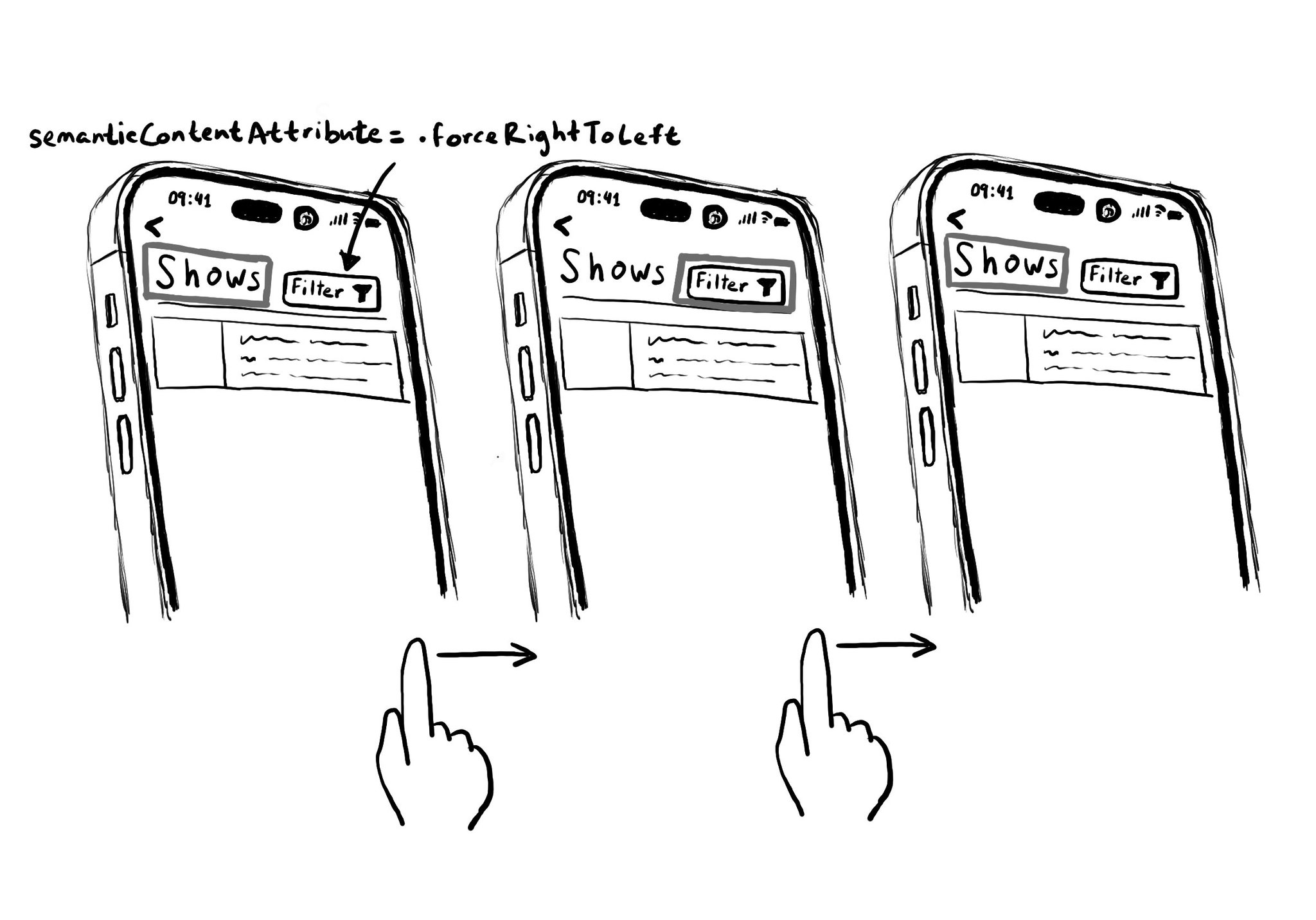

Hacks are accessibility’s worst enemy. Here is an example. There is a ‘trick’ floating around the internet: if you want a button with an icon to the right of the text, set the button's semantic content attribute to force right-to-left. It's a great way to create focus traps.
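To make the contrast concrete, here is a minimal sketch of one way to place the icon after the text without touching the layout direction. It assumes iOS 15 or later and uses UIButton.Configuration; the helper name `makeTrailingIconButton` and its parameters are hypothetical and only serve as illustration. The original tip does not prescribe this particular alternative.

```swift
import UIKit

// The hack the tip warns about, shown only for contrast:
// button.semanticContentAttribute = .forceRightToLeft

// Hypothetical helper: builds a button whose icon sits on the trailing edge
// of the title, while the button keeps its natural layout direction.
@available(iOS 15.0, *)
func makeTrailingIconButton(title: String, systemImageName: String) -> UIButton {
    var configuration = UIButton.Configuration.plain()
    configuration.title = title
    configuration.image = UIImage(systemName: systemImageName)
    // Place the image after the title (right in LTR, left in RTL languages).
    configuration.imagePlacement = .trailing
    // Spacing between title and image; 8 points is an arbitrary choice.
    configuration.imagePadding = 8
    return UIButton(configuration: configuration)
}
```

Because the placement is expressed as leading/trailing rather than left/right, the icon should also flip correctly for users of right-to-left languages.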

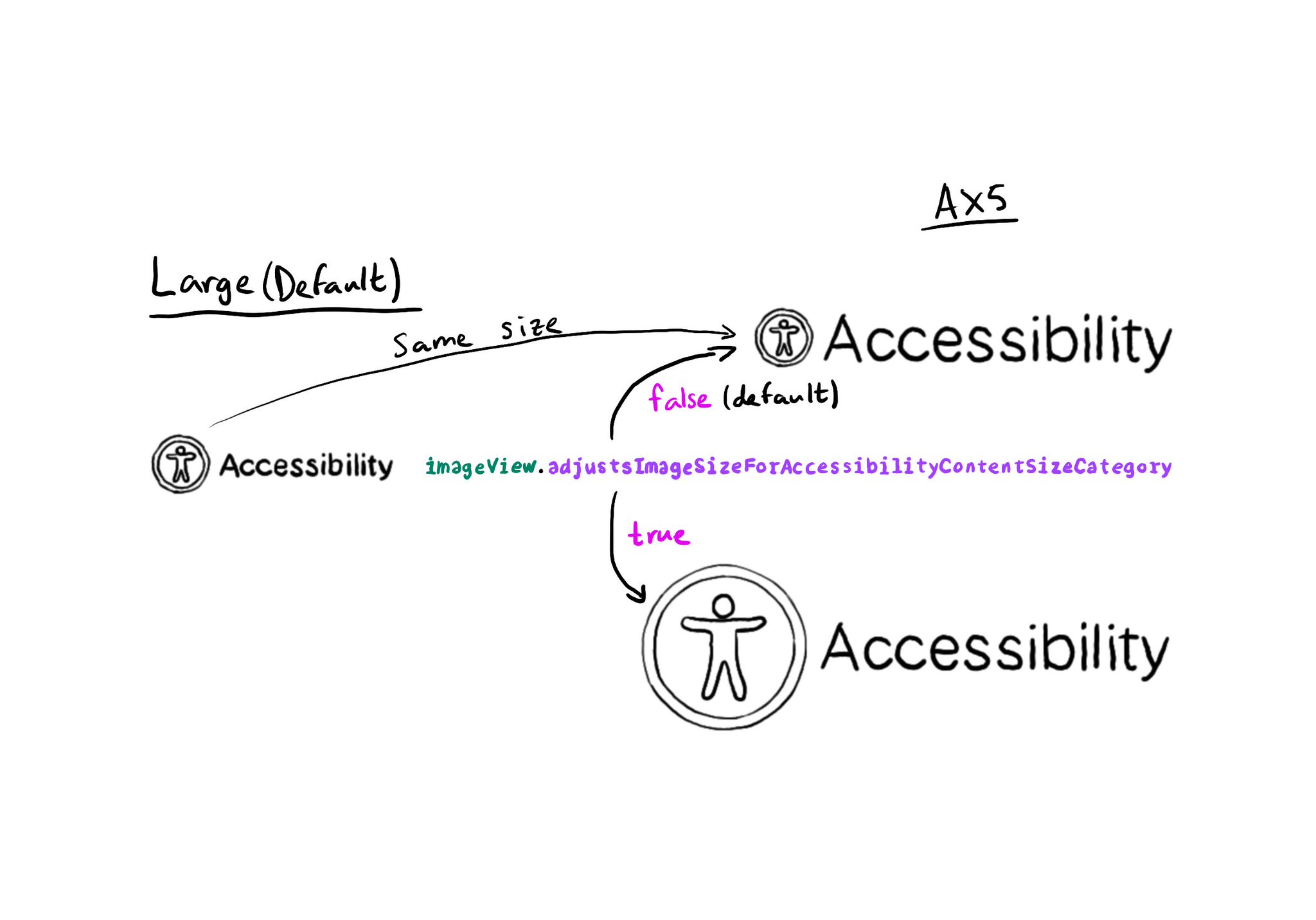

Images can scale automatically for the accessibility content size categories. Set the adjustsImageSizeForAccessibilityContentSizeCategory property to true on any UIImageView whose size you'd like adjusted. https://developer.apple.com/documentation/uikit/uiaccessibilitycontentsizecategoryimageadjusting/adjustsimagesizeforaccessibilitycontentsizecategory
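As a minimal sketch, opting an image view in looks roughly like this. The helper name `makeScalingImageView` and the SF Symbol name are placeholders chosen for illustration.

```swift
import UIKit

// Hypothetical helper: returns an image view that grows with the
// accessibility content size categories.
func makeScalingImageView(systemImageName: String) -> UIImageView {
    let imageView = UIImageView(image: UIImage(systemName: systemImageName))
    // Opt in to automatic scaling for accessibility text sizes.
    imageView.adjustsImageSizeForAccessibilityContentSizeCategory = true
    return imageView
}

let starView = makeScalingImageView(systemImageName: "star.fill")
```

Vector-based images are likely to give the sharpest results here, since a bitmap may look blurry when scaled up.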

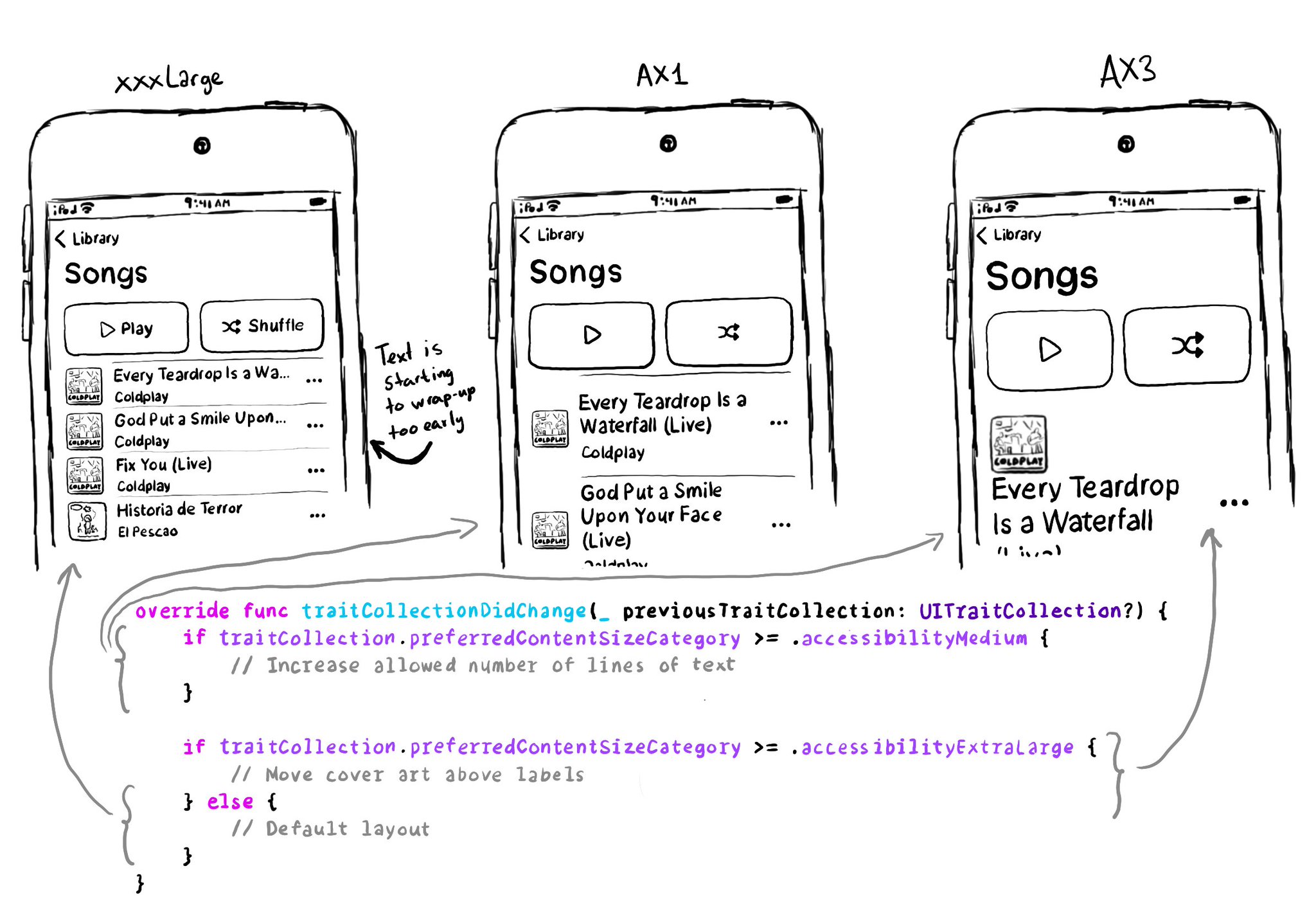

You don't have to reserve an alternative layout for the accessibility categories only. Content size categories can be compared, so you could start tweaking the UI at anything equal to or larger than .extraExtraLarge, for example.
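A minimal sketch of such a comparison, assuming a stack view that should switch from a horizontal to a vertical arrangement once the text gets large. The function name `updateAxis(of:for:)` is hypothetical; you would call it from wherever your code reacts to trait changes.

```swift
import UIKit

// Hypothetical helper: stacks the content vertically for any text size
// equal to or larger than .extraExtraLarge, not just the accessibility ones.
func updateAxis(of stackView: UIStackView, for traitCollection: UITraitCollection) {
    let category = traitCollection.preferredContentSizeCategory
    // UIContentSizeCategory values can be ordered with the usual comparison operators.
    stackView.axis = category >= .extraExtraLarge ? .vertical : .horizontal
}
```

Compared with checking isAccessibilityCategory, this lets the layout adapt earlier, at a threshold of your choosing.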

Content © Daniel Devesa Derksen-Staats — Accessibility up to 11!