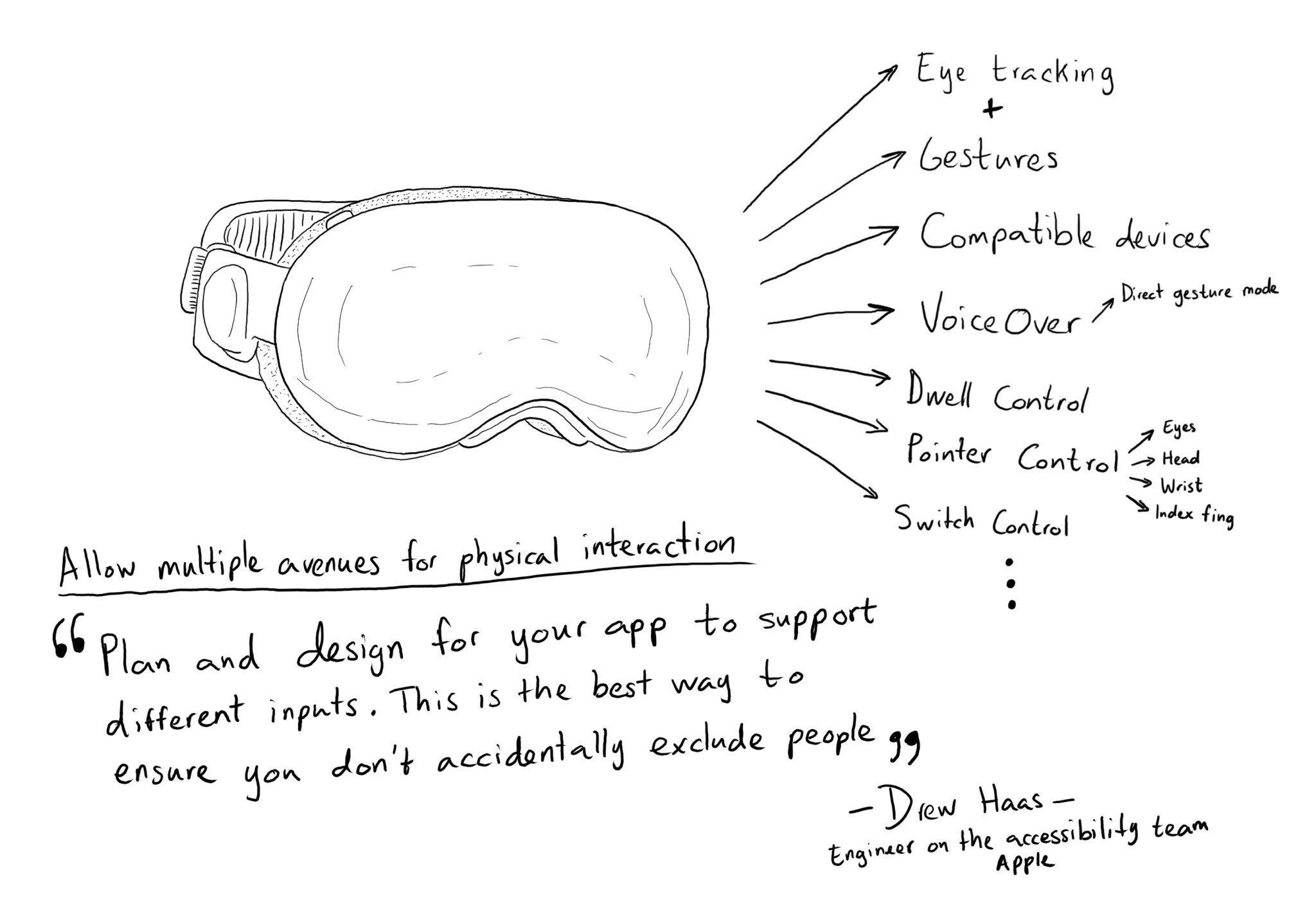

Abstracting your interface so that it can offer multiple input and output mechanisms is key when developing software with an accessibility mindset. Apple has taken this to the next level in visionOS.

Create accessible spatial experiences

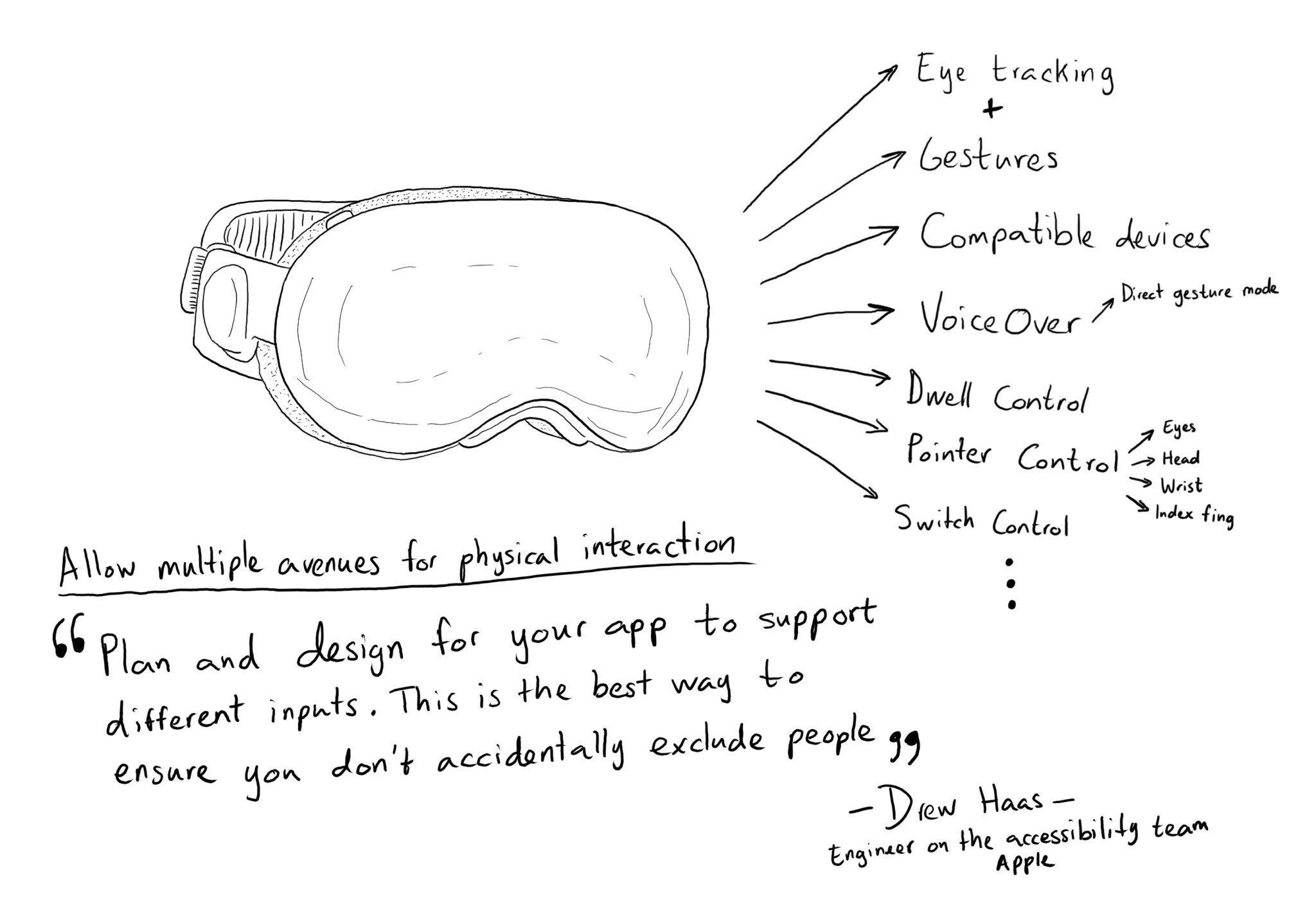

All the accessibility capabilities you can check for have counterpart notification names that you can observe in case users change their preferences while using your app. https://x.com/dadederk/status/1577435144129892352
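As a minimal sketch of that pairing, the example below checks `UIAccessibility.isReduceMotionEnabled` once and then observes its counterpart, `UIAccessibility.reduceMotionStatusDidChangeNotification`. The view controller, the `shouldAnimate` flag and the helper method names are hypothetical and only there for illustration. Other capabilities should follow the same check-plus-notification shape.

```swift
import UIKit

// Hypothetical view controller, used only to illustrate the pattern.
final class AnimatedViewController: UIViewController {
    // Hypothetical flag the rest of the screen would read before animating.
    private(set) var shouldAnimate = true

    override func viewDidLoad() {
        super.viewDidLoad()

        // Observe the notification that pairs with isReduceMotionEnabled.
        NotificationCenter.default.addObserver(
            self,
            selector: #selector(reduceMotionStatusDidChange),
            name: UIAccessibility.reduceMotionStatusDidChangeNotification,
            object: nil
        )

        // Read the current value once so the initial state is correct.
        applyMotionPreference()
    }

    @objc private func reduceMotionStatusDidChange() {
        // The user changed the setting while the app was running.
        applyMotionPreference()
    }

    private func applyMotionPreference() {
        shouldAnimate = !UIAccessibility.isReduceMotionEnabled
    }
}
```

Reading the value in `viewDidLoad` covers the state at launch, while the observer covers changes made mid-session, for example through the Accessibility Shortcut.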

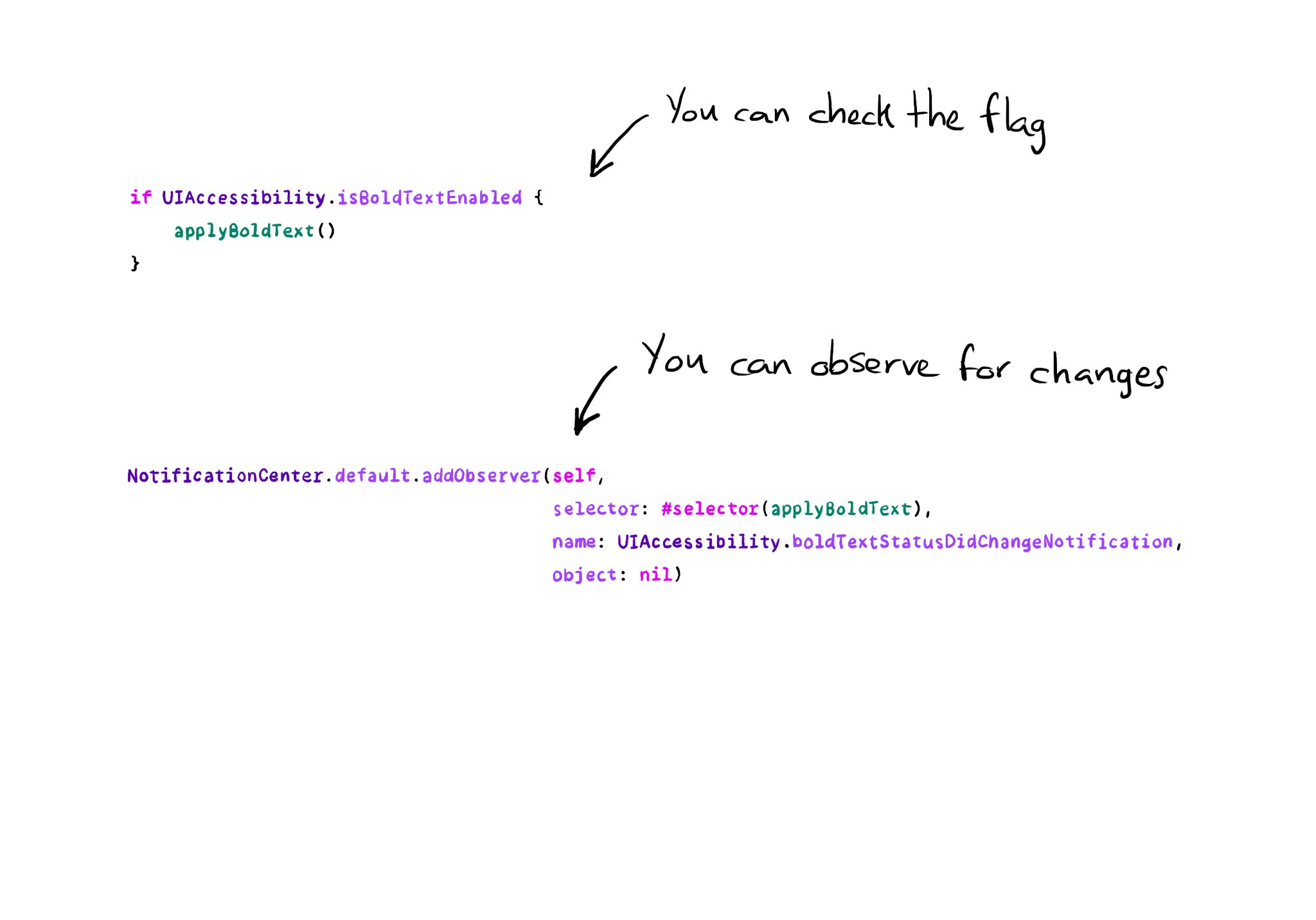

You can add your Accessibility Shortcuts to Control Centre too. That gives you one more quick access point and one more reminder to test often and quickly. How to enable Accessibility Shortcuts: https://x.com/dadederk/status/1583519154165800960?s=61&t=_fK9Muzu2MyFEeJLVQZcJg

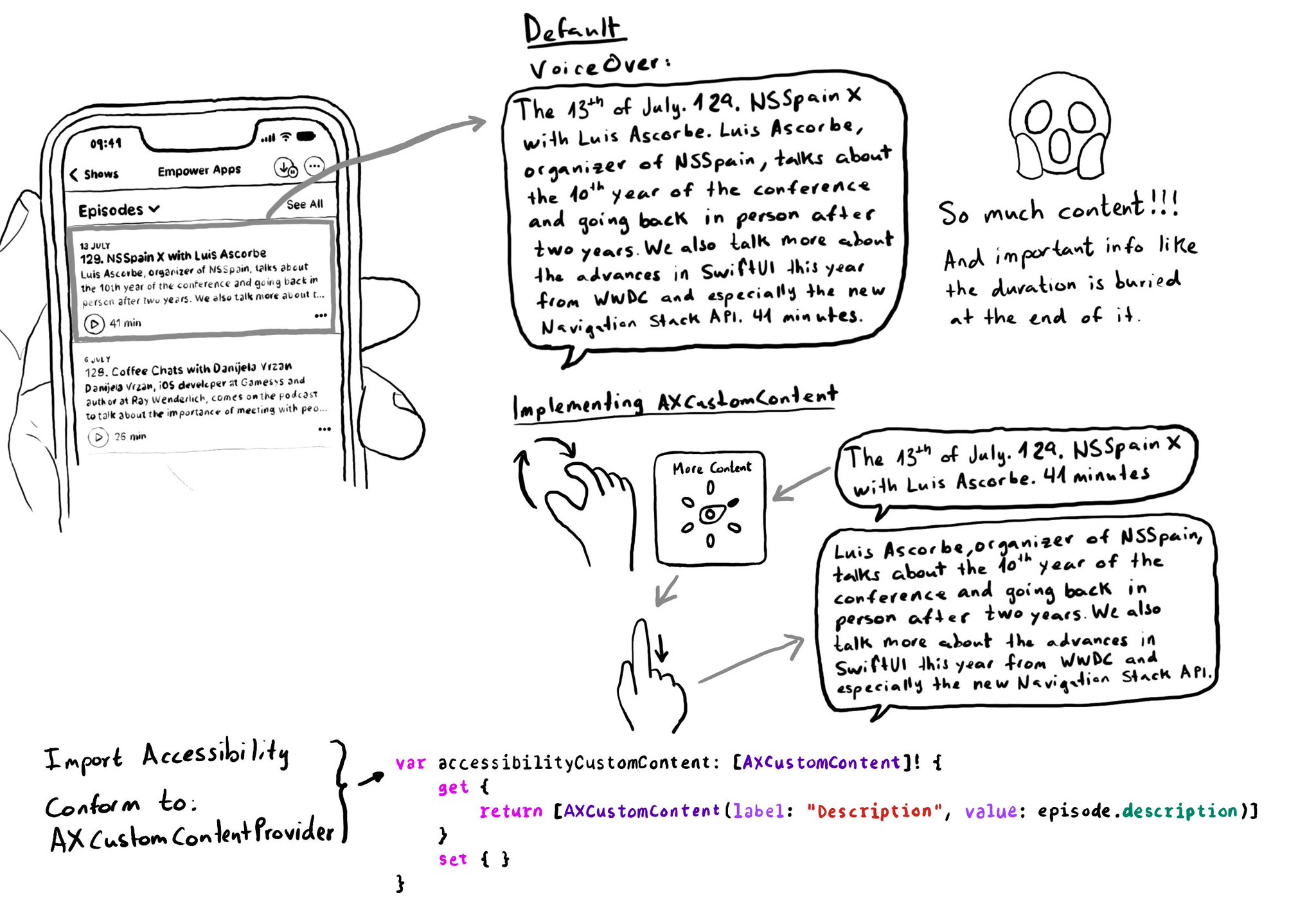

Too much data can overwhelm users. Too little makes for an incomplete experience. It is hard to strike a balance on verbosity, and users may have different preferences. To help with this issue, the AXCustomContent APIs let you mark data as optional.
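Here is a rough sketch of how that could look in UIKit, assuming a hypothetical `FlightCell` with made-up fields. The cell adopts `AXCustomContentProvider` from the Accessibility framework and exposes its extra data as `AXCustomContent` items. As far as I know, items with the default importance are only read on demand through VoiceOver's More Content rotor, while items marked `.high` are read along with the label.

```swift
import UIKit
import Accessibility

// Hypothetical cell and fields, used only to illustrate the API.
final class FlightCell: UITableViewCell, AXCustomContentProvider {
    // Required by AXCustomContentProvider.
    var accessibilityCustomContent: [AXCustomContent]! = []

    func configure(route: String, departure: String, gate: String, aircraft: String) {
        isAccessibilityElement = true
        // Keep the label short: only the essential information.
        accessibilityLabel = route

        // Important enough to always be announced with the label.
        let departureContent = AXCustomContent(label: "Departure", value: departure)
        departureContent.importance = .high

        // Optional details: default importance, available on demand.
        let gateContent = AXCustomContent(label: "Gate", value: gate)
        let aircraftContent = AXCustomContent(label: "Aircraft", value: aircraft)

        accessibilityCustomContent = [departureContent, gateContent, aircraftContent]
    }
}
```

Splitting the data this way keeps the default announcement brief and leaves it to each user to decide how much extra detail they want to hear.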

Content © Daniel Devesa Derksen-Staats — Accessibility up to 11!