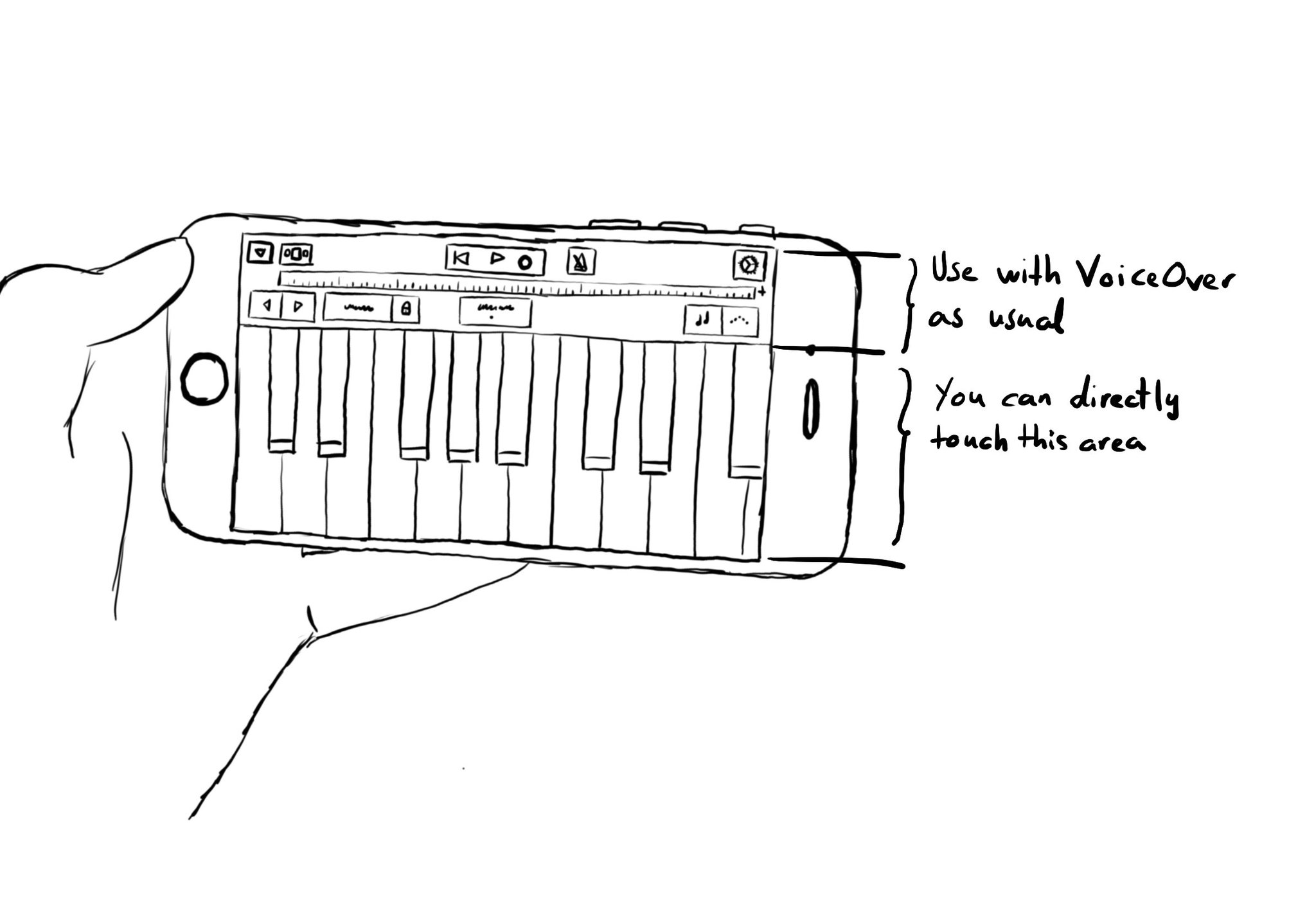

Imagine playing a piano with VoiceOver. You'd have to find the key you want to play and then double-tap it. It would be a very difficult experience. With the .allowsDirectInteraction accessibility trait, VoiceOver passes touch gestures straight through to the element instead of interpreting them itself.

Use it carefully, and only when it really makes sense for users to handle a control directly with touch. Other examples could be a drawing app or some games.
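
As a rough illustration of the piano case, here is a minimal UIKit sketch. The `PianoKeyboardView` class, its label, and the touch handling are hypothetical and only there for the example. The only parts taken from the text above are the `.allowsDirectInteraction` trait and the idea that touches reach the view directly.

```swift
import UIKit

// Hypothetical keyboard view, used only to illustrate the trait.
final class PianoKeyboardView: UIView {

    override init(frame: CGRect) {
        super.init(frame: frame)
        configureAccessibility()
    }

    required init?(coder: NSCoder) {
        super.init(coder: coder)
        configureAccessibility()
    }

    private func configureAccessibility() {
        // Expose the whole keyboard as a single accessibility element.
        isAccessibilityElement = true
        // Assumed label; tell the user what they are about to touch.
        accessibilityLabel = "Piano keyboard"
        // With this trait set, VoiceOver hands touches inside the
        // element straight to the view.
        accessibilityTraits = .allowsDirectInteraction
    }

    override func touchesBegan(_ touches: Set<UITouch>, with event: UIEvent?) {
        super.touchesBegan(touches, with: event)
        // Assumption: the app maps the touch location to a key and
        // plays the corresponding note here.
    }
}
```

Because VoiceOver no longer describes what is under the finger inside this view, a clear label (and possibly a hint) on the element itself is probably the least you would want to provide.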