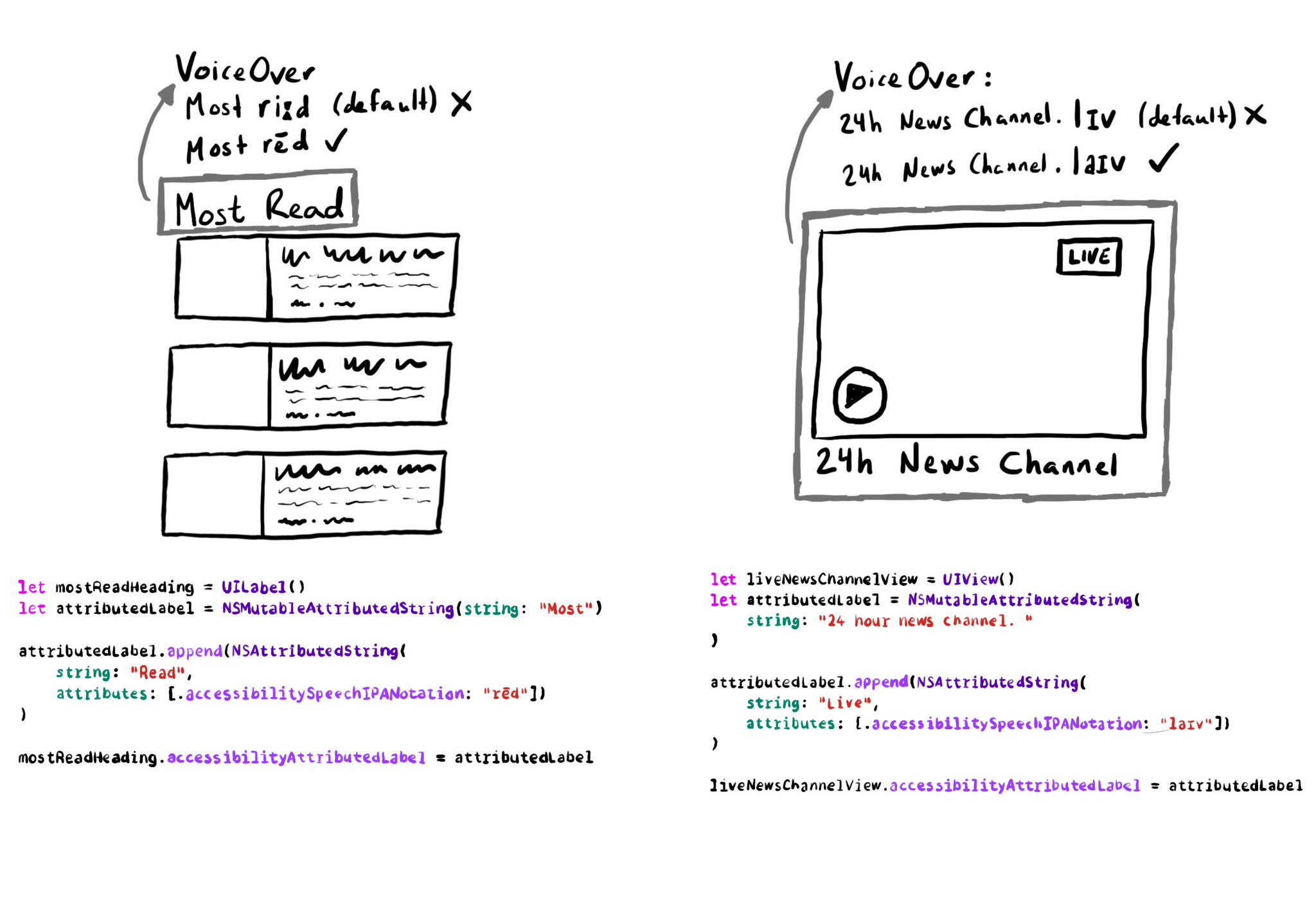

accessibilitySpeechIPANotation is sometimes handy in English, where some words are spelled the same but pronounced differently depending on the context, such as "live" or "read". You can also use it to correct how VoiceOver pronounces your app's name!

```swift
import UIKit

let liveNewsChannelView = UIView()

let attributedLabel = NSMutableAttributedString(string: "24 hour news channel. ")

// Pronounce "Live" as /laɪv/ (as in "live broadcast"), not /lɪv/ (as in "to live").
attributedLabel.append(NSAttributedString(string: "Live", attributes: [.accessibilitySpeechIPANotation: "laɪv"]))

liveNewsChannelView.accessibilityAttributedLabel = attributedLabel
```
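
The same idea should work for "read", the other example mentioned above. Here is a minimal sketch, assuming you want the hint on a single word inside a longer sentence. The helper `attributedLabel(_:pronouncing:as:)`, the `historyLabel` view, the sentence, and the IPA string "ɹɛd" are all made up for illustration, so check the result with VoiceOver on a device.

```swift
import UIKit

/// Hypothetical helper (not part of UIKit): returns an attributed label in which
/// the first occurrence of `word` carries an IPA pronunciation hint.
func attributedLabel(_ text: String, pronouncing word: String, as ipa: String) -> NSAttributedString {
    let label = NSMutableAttributedString(string: text)
    let range = (text as NSString).range(of: word)
    // Only add the attribute if the word is actually present in the text.
    if range.location != NSNotFound {
        label.addAttribute(.accessibilitySpeechIPANotation, value: ipa, range: range)
    }
    return label
}

let sentence = "You read this article yesterday."
let historyLabel = UILabel()
historyLabel.text = sentence

// Past tense "read" should rhyme with "red"; the IPA string here is an assumption.
historyLabel.accessibilityAttributedLabel = attributedLabel(sentence, pronouncing: "read", as: "ɹɛd")
```

Applying the attribute to a range, rather than appending a separate attributed string, keeps the visible text and the accessibility label built from the same sentence.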