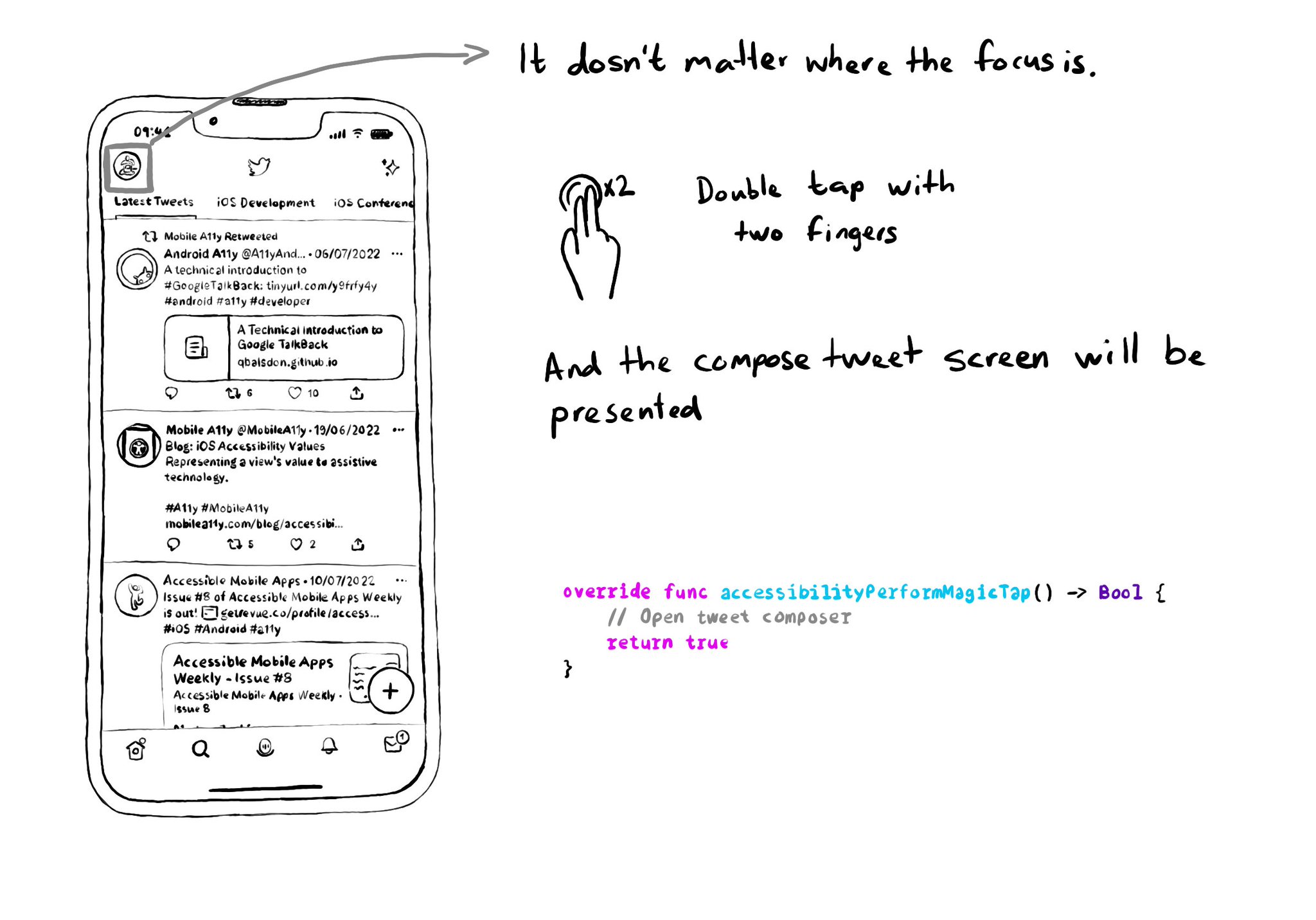

VoiceOver has a very cool gesture called the Magic Tap (a two-finger double tap). It should trigger the most important action for the app's current state. Examples: start/stop a timer, play/pause music, take a photo, compose a tweet...

You just need to override `accessibilityPerformMagicTap()` to capture that gesture, run the desired code, and return `true` if it was handled successfully.
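
As a rough illustration, here is a minimal sketch for a timer screen. The class name `TimerViewController`, the `isRunning` property, and the announcement strings are hypothetical, made up for this example; only `accessibilityPerformMagicTap()` and `UIAccessibility.post(notification:argument:)` come from UIKit.

```swift
import UIKit

// Hypothetical timer screen; the class name and `isRunning` are illustrative only.
final class TimerViewController: UIViewController {
    private(set) var isRunning = false

    // VoiceOver calls this when the user performs the Magic Tap.
    override func accessibilityPerformMagicTap() -> Bool {
        // Toggle the main action for the current state: start or stop the timer.
        isRunning.toggle()

        // Announce the new state so the VoiceOver user gets spoken feedback.
        UIAccessibility.post(
            notification: .announcement,
            argument: isRunning ? "Timer started" : "Timer stopped"
        )

        // Returning true tells the system the gesture was handled.
        return true
    }
}
```

If the method returns `false`, the system treats the gesture as unhandled, so it is worth returning `true` only when the action actually ran.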