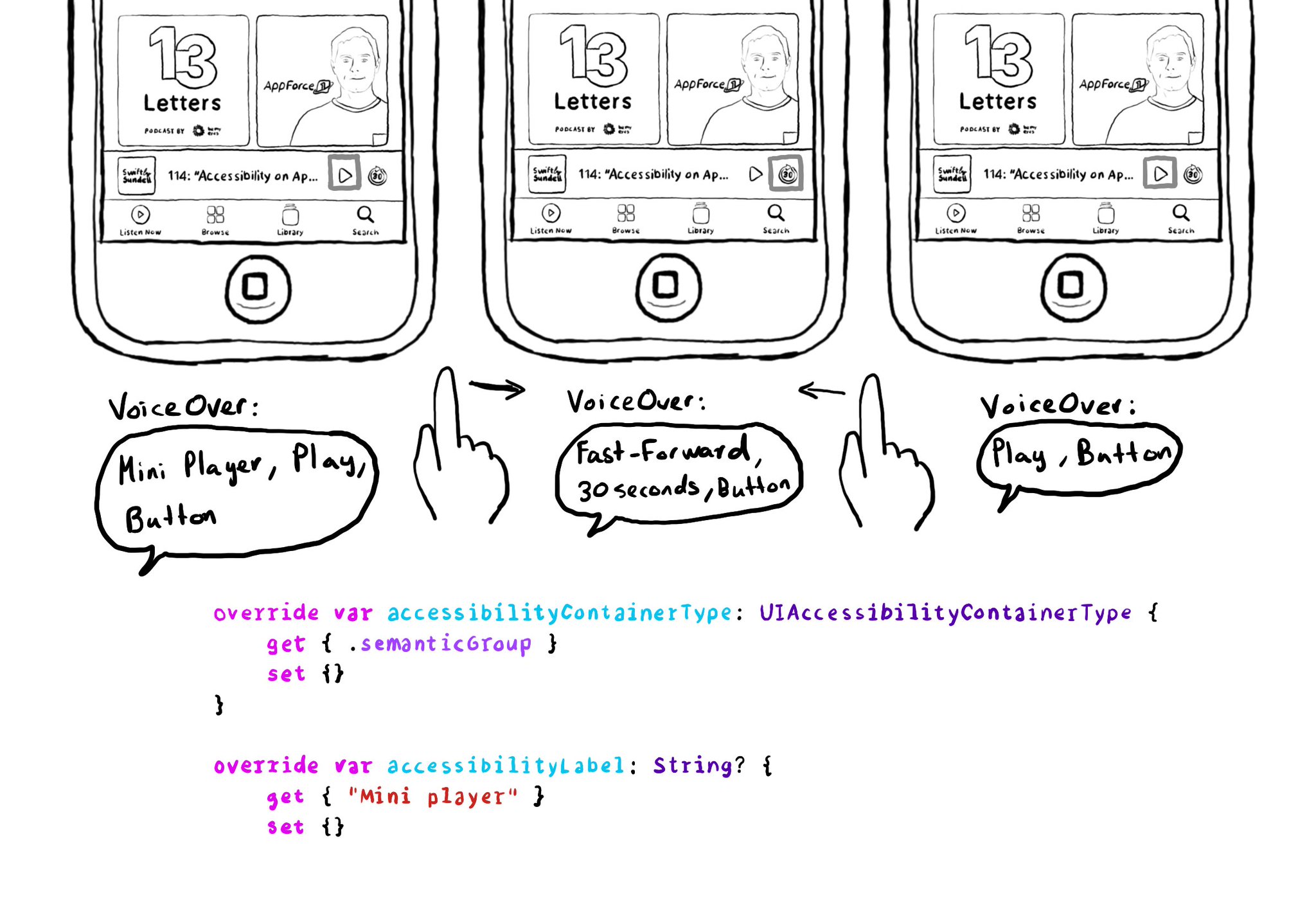

Have you noticed that the first time you select an element in the Apple Podcasts mini player, VoiceOver says "Mini player" before describing the selected element? This gives the user more context about which "feature" those elements belong to.

In UIKit, you can achieve this by setting the mini player container's `accessibilityContainerType` to `.semanticGroup` and giving the container an `accessibilityLabel`, in this case "Mini player".
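Here is a minimal sketch of that setup, assuming a custom container view. The `MiniPlayerView` class, its subviews, and their titles are hypothetical placeholders. Only `isAccessibilityElement`, `accessibilityContainerType`, `.semanticGroup` (iOS 13 or later) and `accessibilityLabel` come from UIKit.

```swift
import UIKit

// Hypothetical mini player container. Only the accessibility
// configuration below reflects the technique; the layout is a placeholder.
final class MiniPlayerView: UIView {
    let titleLabel = UILabel()
    let playPauseButton = UIButton(type: .system)
    let skipForwardButton = UIButton(type: .system)

    override init(frame: CGRect) {
        super.init(frame: frame)
        configureSubviews()
        configureAccessibility()
    }

    required init?(coder: NSCoder) {
        super.init(coder: coder)
        configureSubviews()
        configureAccessibility()
    }

    private func configureSubviews() {
        // Placeholder content for the hypothetical controls.
        titleLabel.text = "Episode title"
        playPauseButton.setTitle("Play", for: .normal)
        skipForwardButton.setTitle("Skip forward", for: .normal)

        let stack = UIStackView(arrangedSubviews: [titleLabel, playPauseButton, skipForwardButton])
        stack.axis = .horizontal
        stack.spacing = 8
        stack.translatesAutoresizingMaskIntoConstraints = false
        addSubview(stack)
        NSLayoutConstraint.activate([
            stack.leadingAnchor.constraint(equalTo: leadingAnchor, constant: 16),
            stack.trailingAnchor.constraint(equalTo: trailingAnchor, constant: -16),
            stack.centerYAnchor.constraint(equalTo: centerYAnchor)
        ])
    }

    private func configureAccessibility() {
        // The container is not itself focusable; VoiceOver still
        // navigates to each child element individually.
        isAccessibilityElement = false
        // Mark the children as one semantic group.
        accessibilityContainerType = .semanticGroup
        // The group's name, spoken when focus first enters the group.
        accessibilityLabel = "Mini player"
    }
}
```

Because the label lives on the container rather than on each button, the individual controls keep their own short labels ("Play", "Skip forward"). VoiceOver adds the "Mini player" context only when focus moves into the group.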

https://developer.apple.com/documentation/objectivec/nsobject-swift.class/accessibilitycontainertype

https://developer.apple.com/documentation/uikit/uiaccessibilitycontainertype/semanticgroup