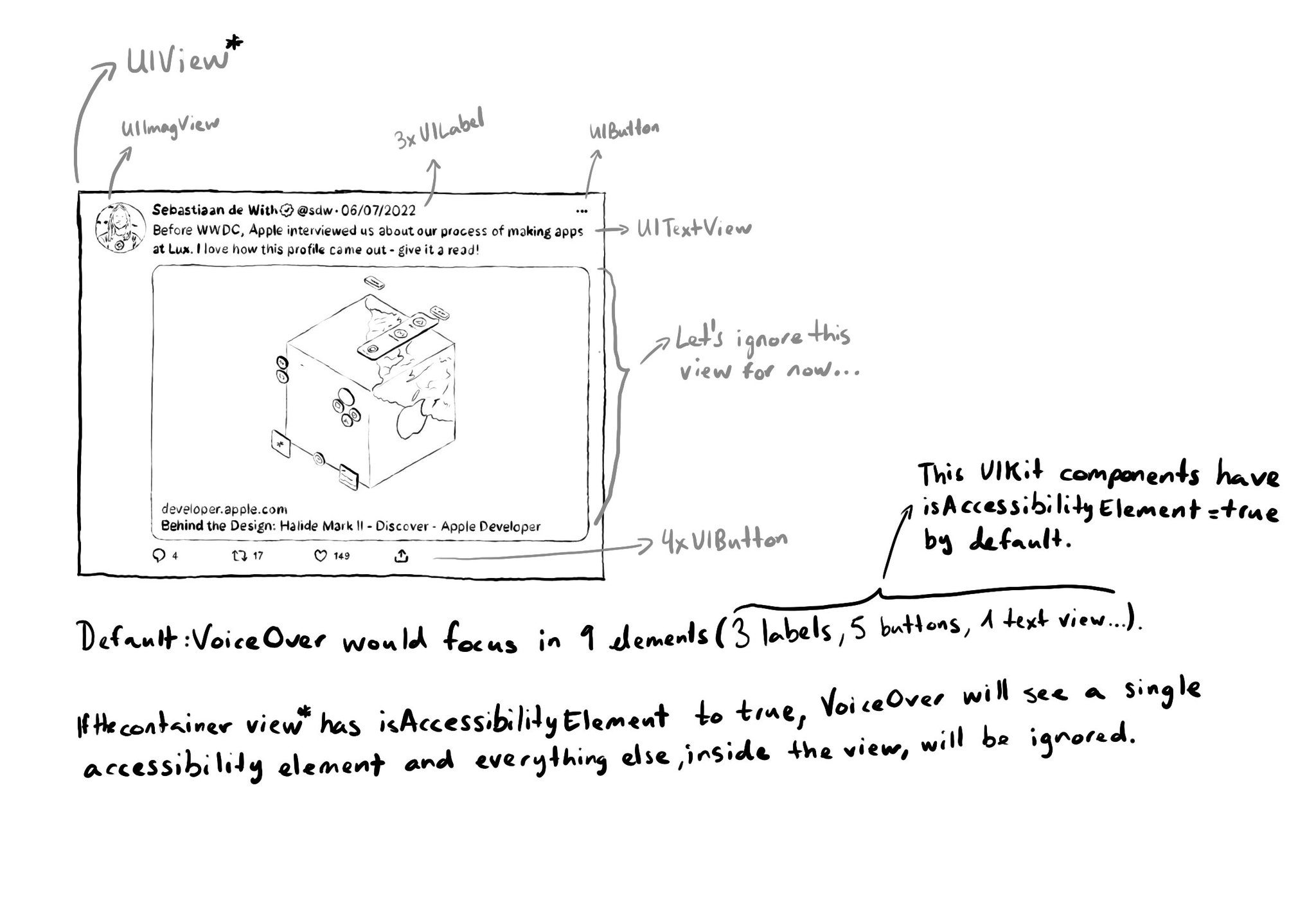

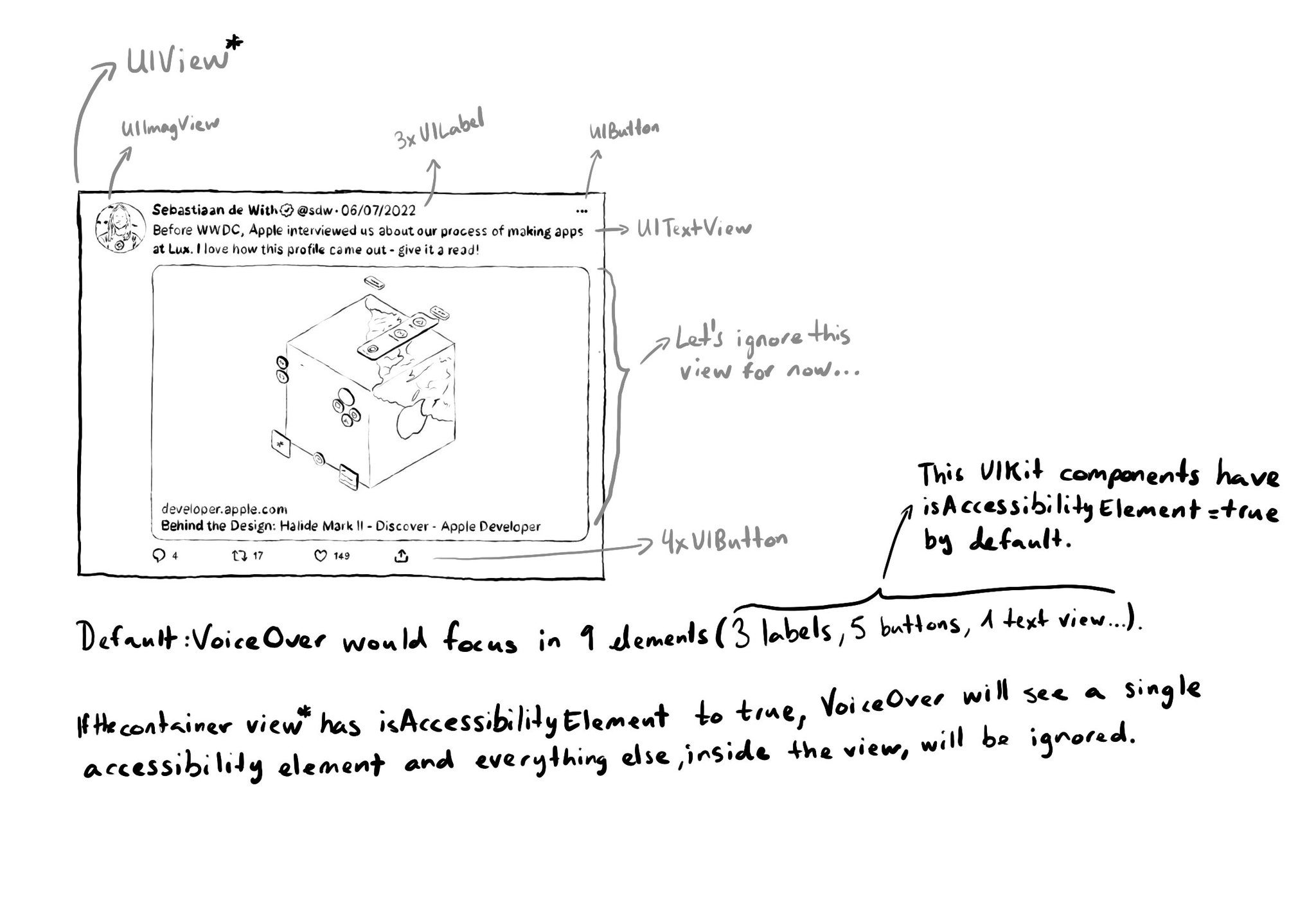

When you set isAccessibilityElement to true on a view, assistive technologies like VoiceOver stop looking for other accessible elements in that view's hierarchy. So if we make a view accessible, its subviews, including buttons and labels, won't be accessible.
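To make that behaviour concrete, here is a minimal sketch. `SongCardView` and its two labels are hypothetical names invented for illustration. Because the container becomes the accessibility element, the sketch also combines the labels' text into the container's own accessibility label so the information in the subviews is not lost.

```swift
import UIKit

// Hypothetical card view, used only for illustration.
final class SongCardView: UIView {
    let titleLabel = UILabel()
    let artistLabel = UILabel()

    override init(frame: CGRect) {
        super.init(frame: frame)
        addSubview(titleLabel)
        addSubview(artistLabel)

        // The container is now a single accessibility element, so
        // VoiceOver no longer visits titleLabel and artistLabel individually.
        isAccessibilityElement = true
    }

    required init?(coder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }

    // Expose the subviews' text through the container instead.
    override var accessibilityLabel: String? {
        get {
            [titleLabel.text, artistLabel.text]
                .compactMap { $0 }
                .joined(separator: ", ")
        }
        set { super.accessibilityLabel = newValue }
    }
}
```

If the subviews include controls that need to stay individually reachable, grouping them this way is probably the wrong tool, since those controls would be hidden from VoiceOver too.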

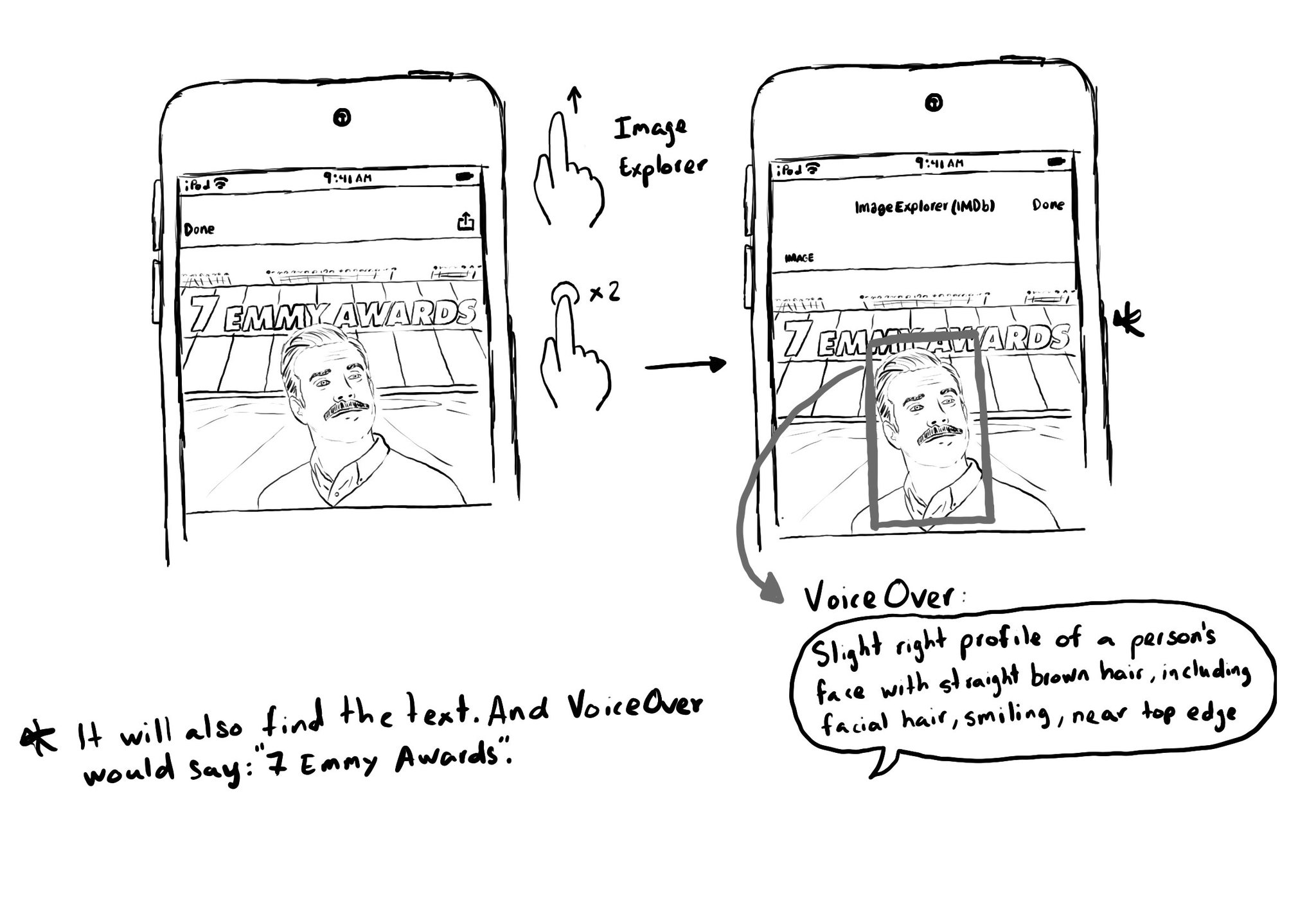

Images that convey important information should have the .image accessibility trait and provide alternative text in their accessibility label. "Image" will be added to VoiceOver's utterance, and the user will be able to use Image Explorer. Image Explorer is fairly new, introduced just a couple of years ago. But if you were already configuring the image trait appropriately, your users suddenly got this new functionality for free. Isn't that awesome? With VoiceOver on, open Image Explorer by swiping up on an image and double-tapping. It uses on-device intelligence to let users find people (with a basic description and their position in the photo), objects, or text in images. It is very cool!
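A minimal sketch of that configuration follows. The helper name `makeAccessibleImageView` and its parameters are made up for this example; the accessibility properties themselves are standard UIKit.

```swift
import UIKit

// Hypothetical helper: builds an image view that VoiceOver announces
// as an image, with the given description as its alternative text.
func makeAccessibleImageView(image: UIImage?, description: String) -> UIImageView {
    let imageView = UIImageView(image: image)

    // Let VoiceOver focus the image view.
    imageView.isAccessibilityElement = true

    // Adds "Image" to VoiceOver's utterance.
    imageView.accessibilityTraits.insert(.image)

    // The alternative text: describe what the image conveys.
    imageView.accessibilityLabel = description

    return imageView
}
```

Purely decorative images are a different case: leaving them out of the accessibility tree is usually the better choice.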

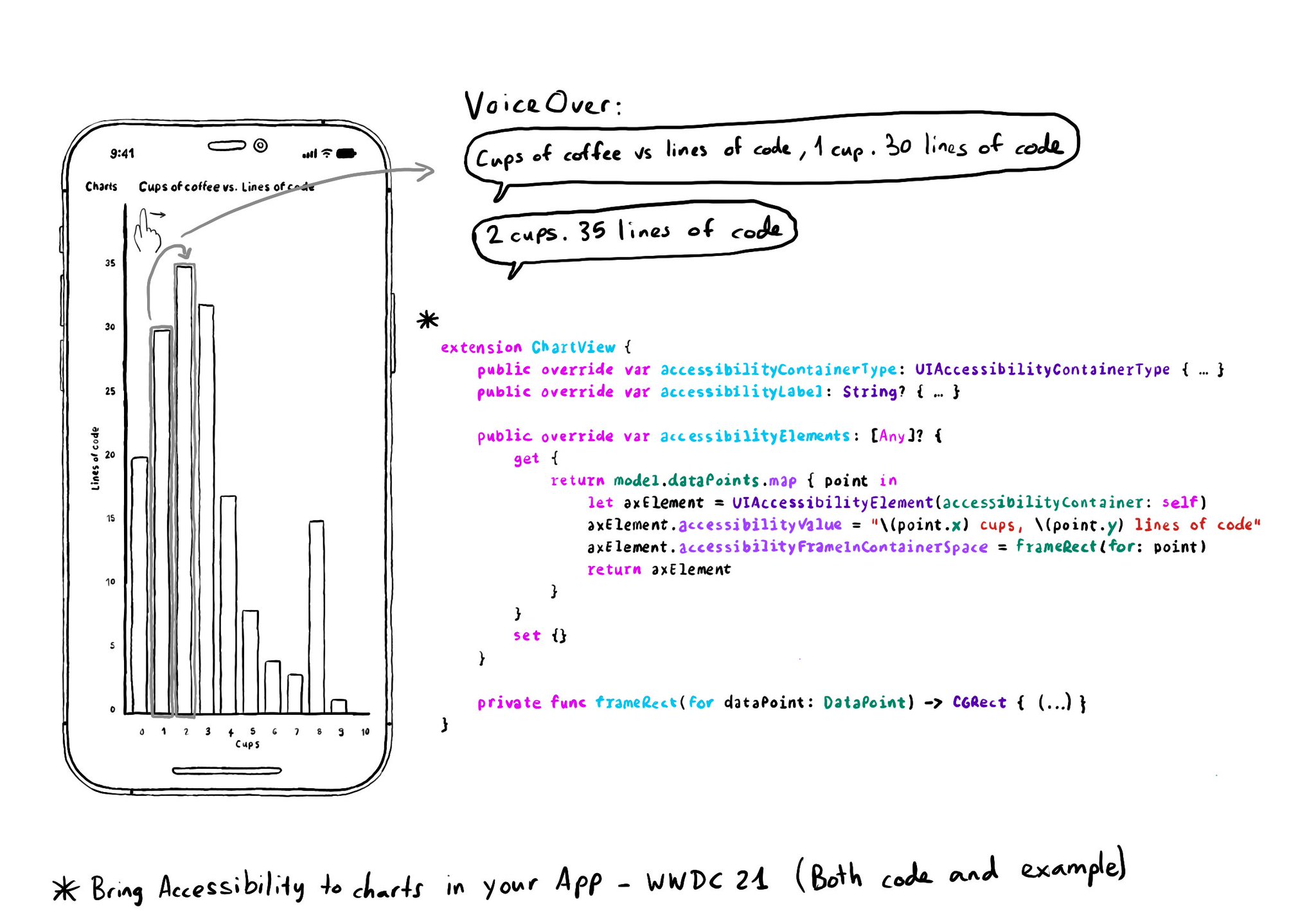

Creating UIAccessibilityElements, combined with a .semanticGroup accessibilityContainerType on the containing view, can also help you make components as complex as charts accessible. The example is from the WWDC21 session "Bring Accessibility to Charts": https://developer.apple.com/videos/play/wwdc2021/10122/
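As a rough sketch of the idea (this is not the code from the WWDC session), a custom-drawn chart view can vend one UIAccessibilityElement per bar. `BarChartView`, its `Bar` model, the chart label, and the "minutes" unit are all assumptions made up for illustration.

```swift
import UIKit

// Hypothetical custom-drawn bar chart, used only for illustration.
final class BarChartView: UIView {
    // Hypothetical model: one bar, with its frame in the chart's coordinate space.
    struct Bar {
        let name: String
        let minutes: Int
        let frame: CGRect
    }

    var bars: [Bar] = [] {
        didSet { rebuildAccessibilityElements() }
    }

    private func rebuildAccessibilityElements() {
        // Tell assistive tech that the elements inside belong together,
        // and give the group a name (an assumed label for this example).
        accessibilityContainerType = .semanticGroup
        accessibilityLabel = "Listening time per day"

        // One accessibility element per bar, even though the bars are
        // drawn rather than backed by real subviews.
        accessibilityElements = bars.map { bar in
            let element = UIAccessibilityElement(accessibilityContainer: self)
            element.accessibilityLabel = bar.name
            element.accessibilityValue = "\(bar.minutes) minutes"
            element.accessibilityFrameInContainerSpace = bar.frame
            return element
        }
    }
}
```

Using accessibilityFrameInContainerSpace keeps each element's frame relative to the chart, so the frames should stay correct if the chart moves on screen.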

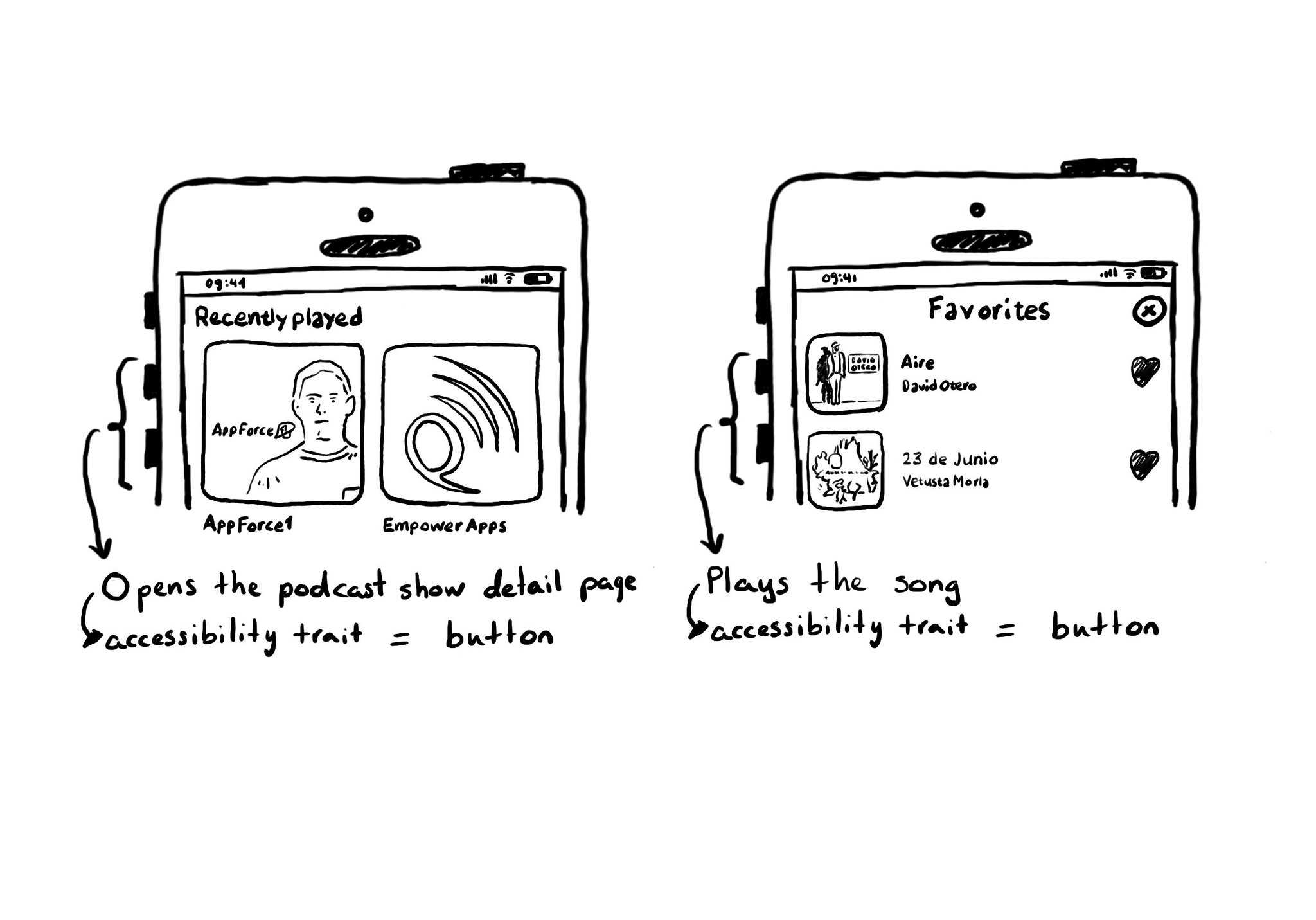

A common example where you need to manually configure the button accessibility trait is some table/collection view cells. These tend to behave like “buttons” that perform an action, like playing music, or take the user to a different screen.
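A minimal sketch of that case, assuming a hypothetical `PlaylistCell` whose tap starts playback, and iOS 14 or later for `defaultContentConfiguration()`:

```swift
import UIKit

// Hypothetical cell that behaves like a button: tapping it plays a playlist.
final class PlaylistCell: UITableViewCell {
    func configure(title: String) {
        var content = defaultContentConfiguration()
        content.text = title
        contentConfiguration = content

        // Insert rather than assign, so any traits the cell already has
        // (such as .selected) are preserved. VoiceOver will then announce
        // "Button" after the cell's label.
        accessibilityTraits.insert(.button)
    }
}
```

The same idea should carry over to collection view cells, since accessibilityTraits is available on any view.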

Content © Daniel Devesa Derksen-Staats — Accessibility up to 11!