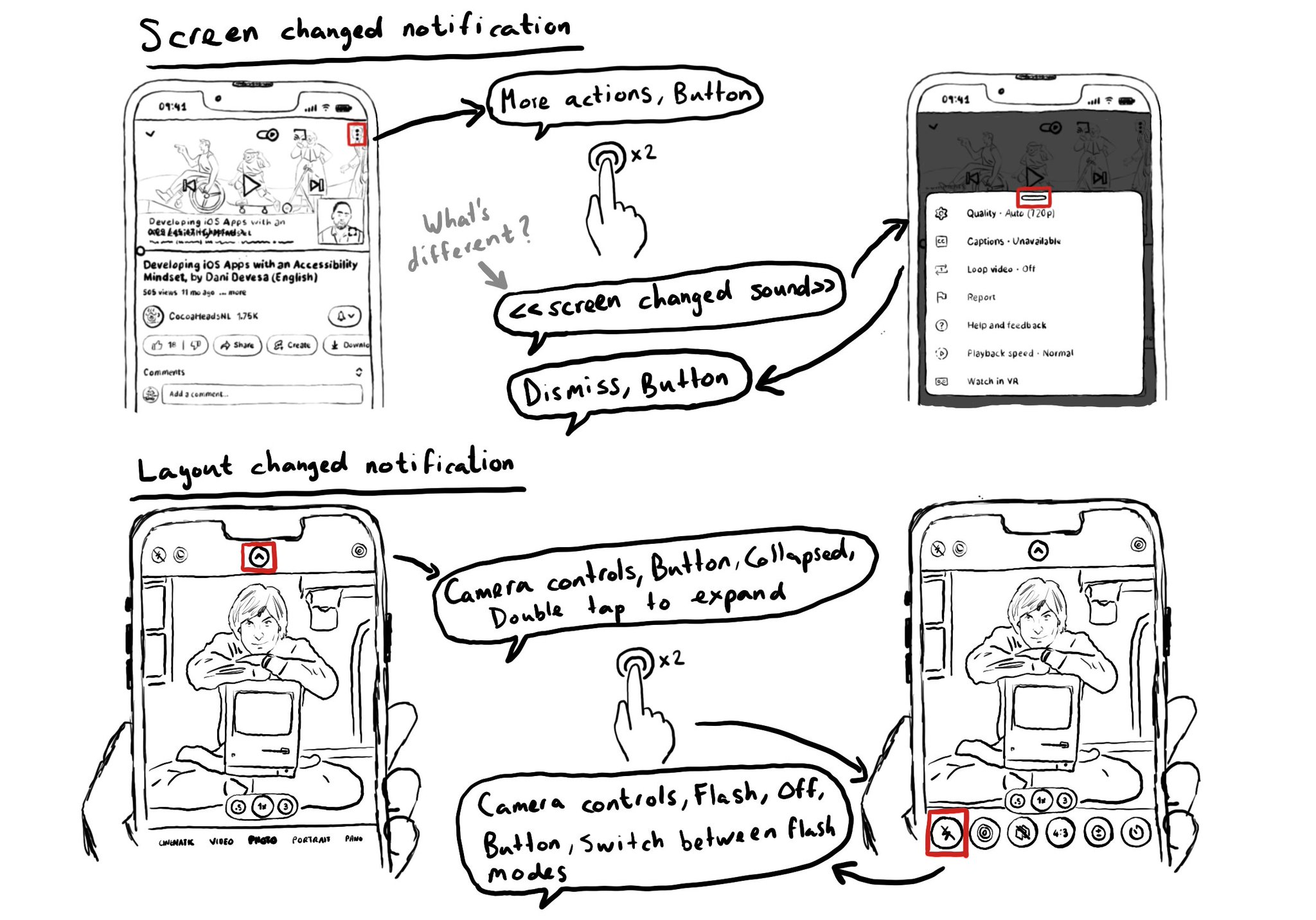

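If both the screenChanged and layoutChanged notifications signal changes in the UI and let you move VoiceOver's focus somewhere else, what's the difference? For the user, screenChanged plays a sound telling them that they have been moved to a new screen. layoutChanged plays no such sound and is meant for changes to part of the current screen, such as an element appearing or disappearing.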
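A minimal sketch of choosing between the two notifications. The helper names `focusNotification(isNewScreen:)` and `moveVoiceOverFocus(to:isNewScreen:)` are hypothetical. Only `UIAccessibility.post(notification:argument:)` and the `.screenChanged` / `.layoutChanged` notifications are UIKit API.

```swift
import UIKit

// Hypothetical helper: picks the notification based on how much of the UI changed.
func focusNotification(isNewScreen: Bool) -> UIAccessibility.Notification {
    // .screenChanged: a new view occupies most of the screen; VoiceOver plays its "new screen" sound.
    // .layoutChanged: only part of the current screen changed; no such sound.
    return isNewScreen ? .screenChanged : .layoutChanged
}

// Hypothetical helper: posts the notification. `element` is the accessibility
// element VoiceOver focus should move to.
func moveVoiceOverFocus(to element: Any?, isNewScreen: Bool) {
    UIAccessibility.post(notification: focusNotification(isNewScreen: isNewScreen),
                         argument: element)
}
```

For example, after revealing an inline error label you might call `moveVoiceOverFocus(to: errorLabel, isNewScreen: false)`. After replacing most of the content in place, you might pass `true` instead.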

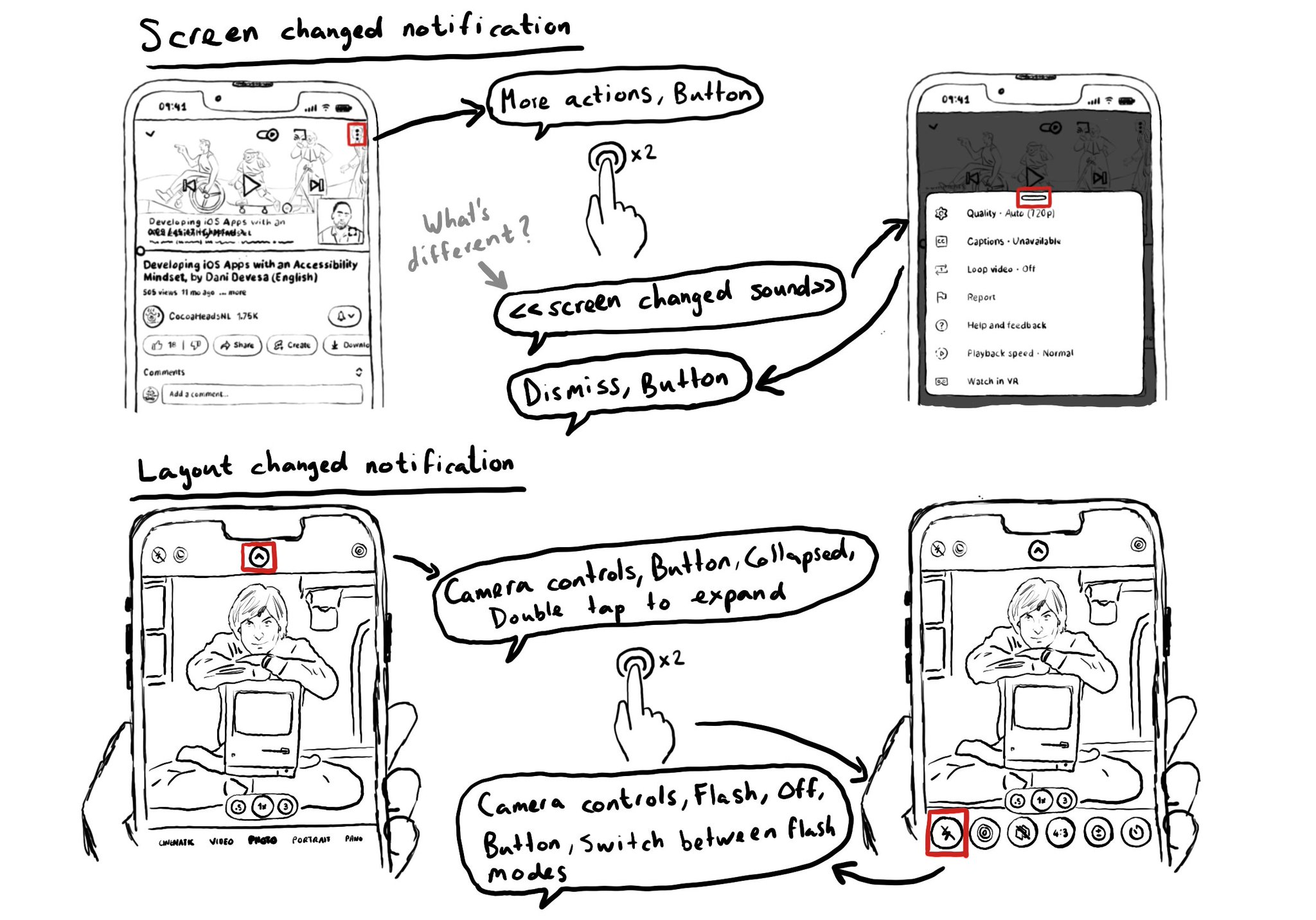

With the accessibilitySpeechPunctuation attribute, you can ask VoiceOver to read out every punctuation mark in your attributed accessibility label, if that is what you want. Good for code snippets?
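A minimal sketch, assuming a plain `UILabel` that displays a code snippet. The function `makeCodeSnippetLabel(_:)` is hypothetical. `NSAttributedString.Key.accessibilitySpeechPunctuation` and `accessibilityAttributedLabel` are UIKit API.

```swift
import UIKit

// Hypothetical factory: a label whose VoiceOver reading includes every punctuation mark.
func makeCodeSnippetLabel(_ snippet: String) -> UILabel {
    let label = UILabel()
    label.text = snippet
    // The attribute's value is a Boolean; true asks VoiceOver to speak punctuation.
    label.accessibilityAttributedLabel = NSAttributedString(
        string: snippet,
        attributes: [.accessibilitySpeechPunctuation: true]
    )
    return label
}
```

With this attribute, a snippet such as `items.count + 1;` should be read with its period and semicolon spoken, instead of having them silently dropped.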

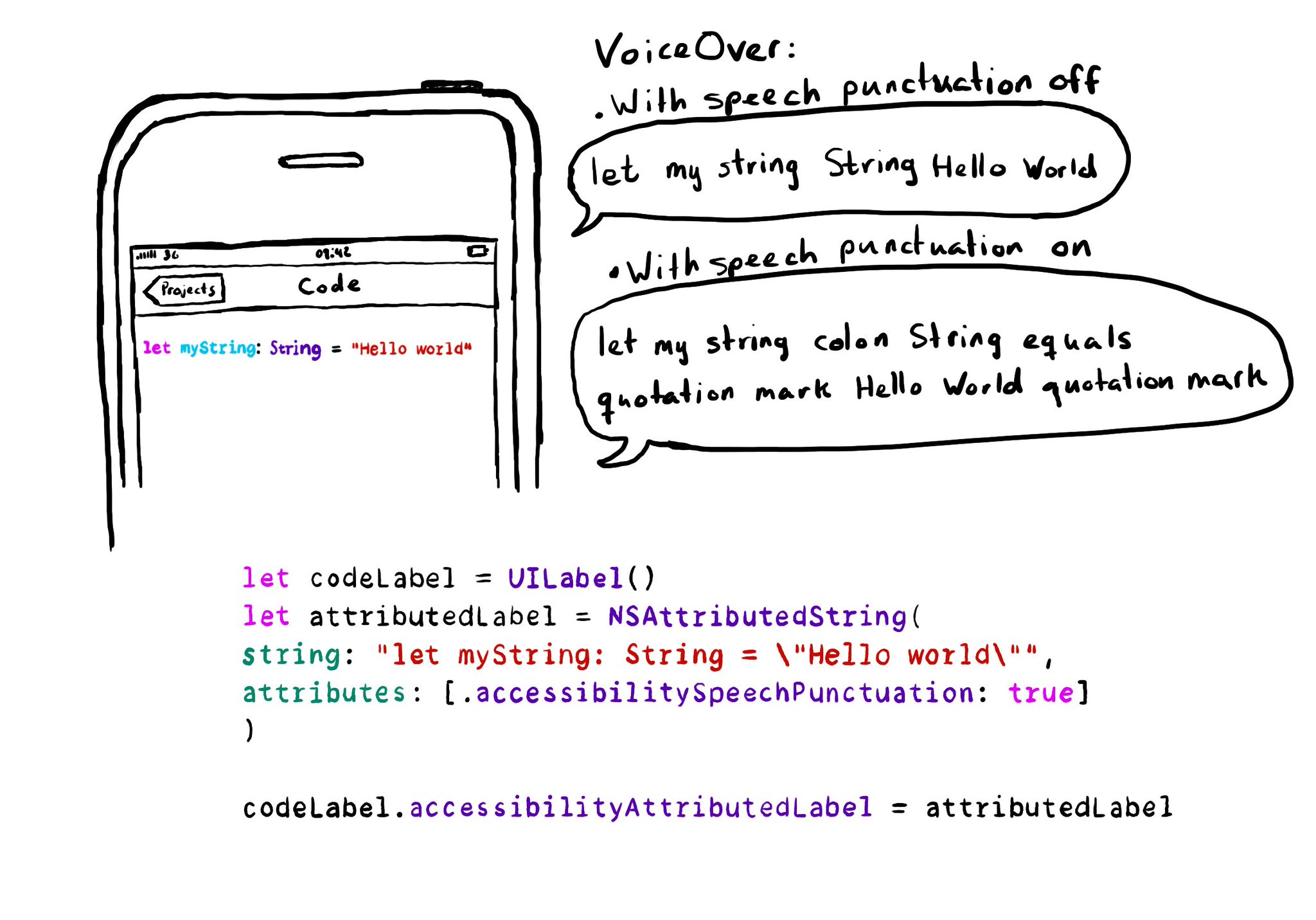

Toggles (UISwitches) are often found separated from the label that precedes (and describes) them, or with an unclear label. They may also be missing a value, trait, or hint, or not be actionable at all.
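One common way to address several of these problems at once is to expose the label and the switch to VoiceOver as a single element. This is a sketch under assumptions. `ToggleRow` is a hypothetical view, layout is omitted, and borrowing the switch's own traits and value is a choice made here so that VoiceOver announces the row as a switch with its current state.

```swift
import UIKit

// Hypothetical row: a text label followed by a UISwitch, exposed as ONE accessibility element.
final class ToggleRow: UIView {
    let titleLabel = UILabel()
    let toggle = UISwitch()

    init(title: String) {
        super.init(frame: .zero)
        titleLabel.text = title
        addSubview(titleLabel)
        addSubview(toggle)
        // Layout constraints omitted for brevity.
        isAccessibilityElement = true // Children are no longer focused separately.
    }

    required init?(coder: NSCoder) { fatalError("init(coder:) has not been implemented") }

    // Label: the visible text that describes the switch.
    override var accessibilityLabel: String? {
        get { titleLabel.text }
        set { }
    }

    // Value and traits: mirrored from the switch itself.
    override var accessibilityValue: String? {
        get { toggle.accessibilityValue }
        set { }
    }

    override var accessibilityTraits: UIAccessibilityTraits {
        get { toggle.accessibilityTraits }
        set { }
    }

    // Double-tap anywhere on the row flips the switch and notifies its targets.
    override func accessibilityActivate() -> Bool {
        toggle.setOn(!toggle.isOn, animated: true)
        toggle.sendActions(for: .valueChanged)
        return true
    }
}
```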

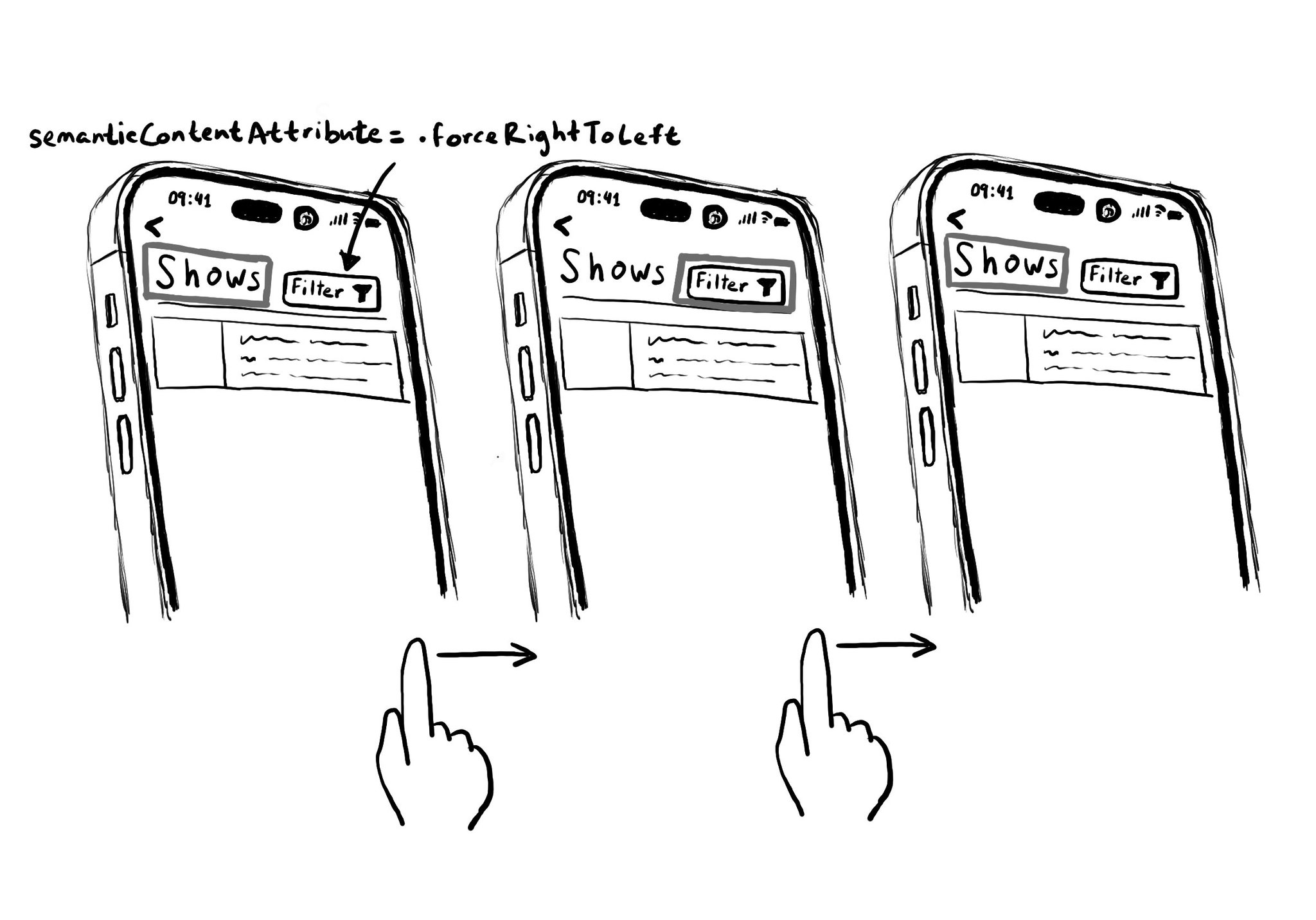

Hacks are accessibility’s worst enemy. Here is an example. A ‘trick’ floating around the internet says that if you want a button with its icon to the right of the text, you should set the button's semantic content attribute to force right-to-left. It is a great way to create focus traps.
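A sketch of one alternative, assuming iOS 15 or later. Instead of flipping the layout direction, ask the button configuration to place the image after the title. `makeTrailingIconButton(title:systemImageName:)` is a hypothetical helper. `UIButton.Configuration`, `imagePlacement`, and `imagePadding` are UIKit API.

```swift
import UIKit

// The 'trick' to avoid (shown only as a comment):
// button.semanticContentAttribute = .forceRightToLeft

// Alternative: keep the layout direction untouched and move only the image.
func makeTrailingIconButton(title: String, systemImageName: String) -> UIButton {
    var configuration = UIButton.Configuration.plain()
    configuration.title = title
    configuration.image = UIImage(systemName: systemImageName)
    // .trailing follows the reading direction, so right-to-left languages still get a mirrored layout.
    configuration.imagePlacement = .trailing
    configuration.imagePadding = 8
    return UIButton(configuration: configuration)
}
```

The design choice here is to express the intent ("icon after the text") directly, rather than lying to the system about the content's direction.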

Content © Daniel Devesa Derksen-Staats — Accessibility up to 11!