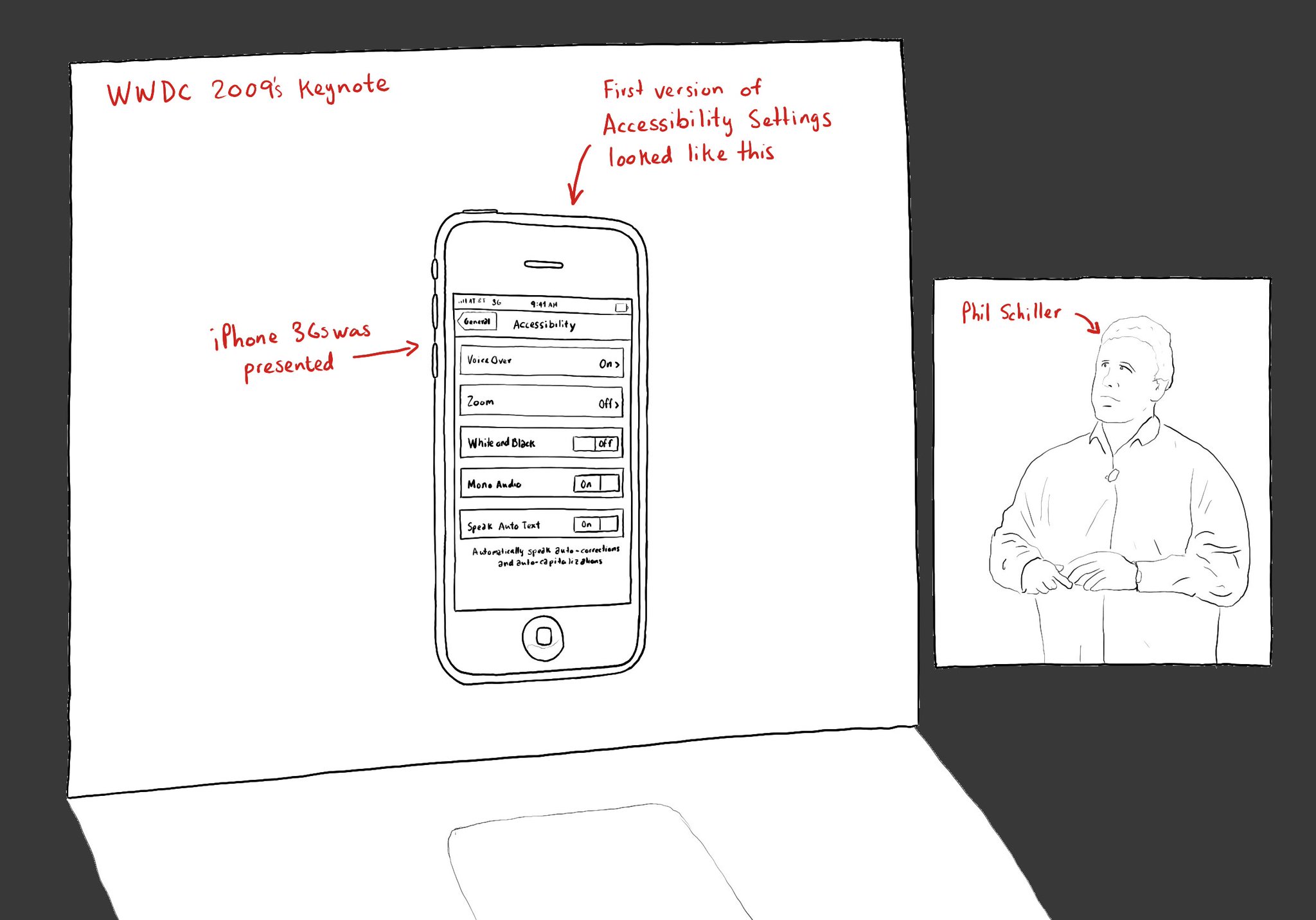

During WWDC 2009's keynote, Phil Schiller spoke for 36 seconds about how the iPhone was, two years later, finally accessible. @shelly tells this amazing story in her audio documentary "36 Seconds That Changed Everything".

https://www.36seconds.org/2019/06/19/36-seconds-transcript/

"Apple didn’t develop VoiceOver for Mac out of the goodness of their hearts. They developed VoiceOver for Mac because if they didn’t they were going to be in serious trouble with their key market, which was education," @JonathanMosen says.

"They did that thinking a third party would write the screen reader for Mac OS 10, and then when really nobody picked up that mantle to write the screen reader as a third party, Apple stepped in and developed VoiceOver," @jamesdempsey says.

"I borrowed a friend’s phone. It was confirmed. The screen was too small, the background too bright, the text too tiny. For the first time in 20 years, Apple had built a product I couldn’t use. I’m fairly sure I cried about that." @shelly

Four minutes before the two-hour mark, in the midst of a long list of new apps to be included on the iPhone 3GS, @pschiller switched slides, revealing the iPhone Accessibility settings screen. “VoiceOver is on the iPhone. They did it.”

"I bought myself an iPhone at the same time as other people. I didn’t have to wait for a new version of the software, an update to be made, or someone sighted to help me. I could start up VoiceOver and it just worked great." @SteveOfMaine